In this post, we're looking at the bottom-right quadrant: the "Loonshots".

Loonshots were by far the most interesting quadrant to research. In most cases, they were well-intentioned efforts to improve the world in a meaningful way. Yet they are exactly the kind of ideas that can attract (and ultimately waste) billions of dollars in investment and millions of smart-person-hours. That’s why it’s worth trying to understand why they fail.

Mighty, and betting against the wrong trends

“The number of people betting against Moore’s Law doubles every year.”

Some paradigm-shifting ideas can be doomed from the beginning because they’re betting against a strong external trend.

A few years ago I heard about a startup called Mighty, which Paul Graham and others were promoting enthusiastically. The company developed a $30/month remote, high-performance web browser (an ad for it can be watched here). Mighty’s browser ran on a high-powered server instead of your computer, giving you really fast performance, even if you had Figma and Google Docs open in a hundred tabs.

The company is now dead. I think it was a technical paradigm shift (outsourcing your browser to a server), but not a paradigm shift in UX. The experience was undoubtedly faster, but was it radically different? I could be convinced that this is a faster horse rather than a loonshot. Most people will not pay $360/year for a faster web browser, when they could just buy a fast new MacBook for ~$1k and have every application be faster and better.
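To put that comparison in numbers, here is a quick break-even sketch. It assumes only the two figures quoted above ($30/month for Mighty and ~$1k for a new MacBook) and ignores that a Mighty subscriber still needs some computer to run it on, so if anything it flatters the subscription.

```python
# Break-even between a Mighty subscription and a new laptop.
# Assumed inputs, taken from the figures quoted in the text above.
MONTHLY_FEE_USD = 30.0
LAPTOP_PRICE_USD = 1000.0

annual_fee = 12 * MONTHLY_FEE_USD
breakeven_months = LAPTOP_PRICE_USD / MONTHLY_FEE_USD

print(f"Subscription cost per year: ${annual_fee:.0f}")
print(f"Subscription overtakes the laptop price after ~{breakeven_months:.0f} months")
```

In under three years of subscribing you could have bought the laptop outright, and the laptop speeds up every application, not just the browser.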

As the discussion in this Hacker News post points out, it was predictable at the time that the chips in personal computers were getting steadily faster each year (i.e., Moore’s Law). Every time a faster generation of laptops came out, it would erode Mighty’s value proposition. True, Mighty could always stay a few levels of performance ahead by upgrading its servers, but I think there are diminishing returns to making a browser faster. The days of the “pinwheel of death” or burning your lap (which are highlighted in Mighty’s commercial) are pretty much solved by how good new laptops are these days.

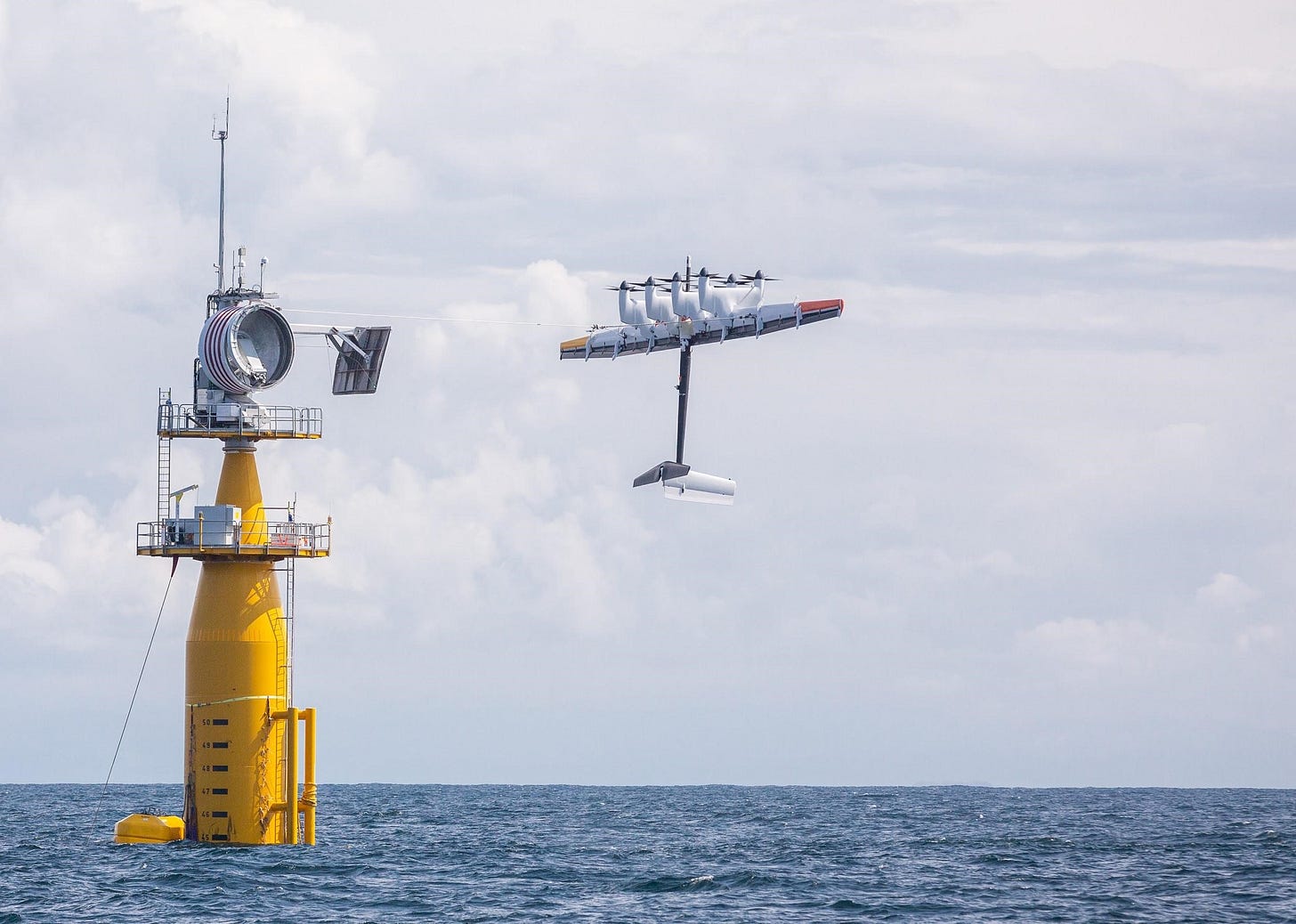

Makani

As Elon Musk famously said:

“One of the biggest traps for smart engineers is optimizing a thing that shouldn’t exist.”

Theory vs. Practice

Makani was a moonshot company trying to commercialize airborne wind energy. They built a giant autonomous fixed-wing aircraft that would fly in circles while generating electricity through its propellers and transmitting it to the ground through a wire tether. This approach had all sorts of theoretical advantages over stationary wind turbines:

By replacing a rigid tower with a tether, you could delete a huge amount of structural cost.

Unconstrained by a physical structure, Makani’s system could also fly at higher altitudes where wind speeds are greater and there’s more energy to be had.

The aircraft could dynamically adapt to a wider range of wind speeds. Conventional windmills have to be curtailed (turned off) when the wind gets too fast.

From watching their documentary, you can get a sense of the technological feats they achieved just to get something flying and generating power. This post from 2014 (and this one from 2015) detail the technical challenges they faced.

Imagine building an autonomous plane that has to do loop-de-loops at 100+ km/hour, take off and land vertically, survive storms, lightning, etc., and do that with zero failures for years on end. As this author points out, the aircraft probably can’t fly in subzero temperatures unless you de-ice the wings. It also probably can’t fly in extremely hot weather, when even some commercial planes are unable to take off.

Contrast the Makani system with wind turbines, which are big dumb propellers that can sit idle when problematic weather arises. Aside from putting solar panels in space, airborne wind seems like one of the most ambitious approaches you could cook up to generate electricity.

I believe Makani is a case where the theoretical merits blinded proponents to all of the mundane, real-world implementation problems. Even if they could get a prototype working, Makani faced massive safety and deployment challenges.

One of Makani’s biggest selling points was that they could capture more energy than windmills, and be sited in more places where wind turbines are uneconomical or can’t be built. But because the system has eight spinning blades on the end of a basically invisible and electrified tether, it can’t be sited anywhere near power lines, humans, roads, farms, etc. If you put blinking lights or other indicators on the tether, the system has too much drag to operate.

The tether-aircraft system sweeps out a large hemisphere, so units must be spaced much further apart than turbines in a typical wind farm for safety reasons. Because of this, Makani’s devices would need a ~40% higher capacity factor (i.e., produce ~40% more energy per unit of rated power over a year) to offset their wider spacing (Source).

Last but not least, there’s the NIMBY problem. This 20 kW prototype is pretty loud, so imagine what a 5 MW system would sound like. Living next to an airborne wind farm would be like living next to an airport with 24/7 departures.

These practical limitations, not the idea itself, are the fatal flaw. Makani promised cheaper renewable energy that could be sited anywhere. In reality, they were building a niche technology that would probably only make sense in offshore locations far from any human activity.

Moving cost targets

When all is said and done, the only metric that really matters for a commodity like electricity is your levelized cost per kilowatt-hour. The person receiving electrons doesn’t know and probably doesn’t care how clever your approach is.

Could Makani compete on cost?

Even with their theoretical advantages, Makani needed to be an absolute slam dunk over wind turbines to stay cost-competitive. When Makani was founded in 2006, onshore wind cost $0.12/kWh and solar probably cost ~$0.85/kWh (extrapolated based on this data).

When Makani was discontinued in 2020, onshore wind was 3x cheaper ($0.04/kWh) and solar was down to $0.06/kWh, more than an order of magnitude cheaper (~14x)! These learning curves for wind and solar are remarkable, but they were not unpredictable.
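The fold reductions above, plus the implied average yearly price decline, can be checked with a few lines of Python. The inputs are the approximate 2006 and 2020 costs quoted in this section; the annual rate is a simple compound-decline calculation over 14 years, not a fitted learning curve.

```python
# Fold reduction and implied average annual cost decline for onshore wind
# and solar, using the approximate figures quoted above (USD per kWh).
START_YEAR, END_YEAR = 2006, 2020

COSTS_USD_PER_KWH = {
    "onshore wind": (0.12, 0.04),
    "solar": (0.85, 0.06),
}


def summarize(start: float, end: float, years: int) -> tuple[float, float]:
    """Return (fold reduction, average compound annual decline as a fraction)."""
    fold = start / end
    annual_decline = 1 - (end / start) ** (1 / years)
    return fold, annual_decline


years = END_YEAR - START_YEAR
for tech, (start, end) in COSTS_USD_PER_KWH.items():
    fold, decline = summarize(start, end, years)
    print(f"{tech}: {fold:.1f}x cheaper, ~{decline:.0%} per year")
```

On these inputs, wind fell by roughly 8% per year and solar by roughly 17% per year, every year, for the entire life of the company. That is the moving target Makani was chasing.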

All in all, I think Makani was a moonshot idea with theoretical advantages worth pursuing once. The idea, which was originally proposed in 1980, deserved one really good shot on goal. But now that we’ve let a talented Google X team navigate the idea maze, I think it’s time to double down on the simple, proven technologies we have.

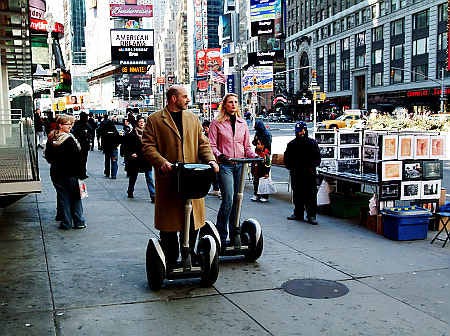

Segway, and collective action problems

Segway is another epic Silicon Valley fail. John Doerr predicted that it would be bigger than the Internet and Steve Jobs thought it could be as big as the PC. The company’s vision was to revolutionize cities by replacing cars with “empowered pedestrians.”

The self-balancing technology might have been a control algorithm innovation, but did Segways actually provide value to users? It cost ~$5k, was too big to ride on the sidewalk, was probably a nuisance to everyone else in the bike lane, and was not that safe (just search “Segway crash compilation” on YouTube).

When asked about the Segway in 2001, Steve Jobs said:

“If enough people see this machine, you won’t have to convince them to architect cities around it; it’ll just happen.”

This kind of magical thinking seems to be the fatal flaw of the Segway. You need a critical mass of people to decide that we should rebuild cities around an unproven device for it to become a safe and useful mode of transport. There is value in the aggregate, but not a clear value for the individual early adopter. I think a lot of failed ideas rely on value propositions of the form “once everyone does XYZ, the world will be so much better.”

The Metaverse, and nebulous value propositions

Speaking of failed ideas, what happened to the Metaverse? It was supposed to be an immersive virtual world where we could do everything. But a reported $36B later, there is little to show for the effort. In 2023, the “year of efficiency”, Meta laid off 10k+ people.

The core problem wasn't technical: Meta did build new VR hardware and software. Rather, the Metaverse suffered from a nebulous value proposition similar to Web3: it promised to revolutionize how we live and work, but couldn't articulate why people would actually want this revolution. Even Meta's own employees had to be forced to use Horizon Worlds, their flagship Metaverse platform.

The vision of attending virtual meetings as Wii-like avatars or socializing in the Metaverse solved no real pain points. If anything, it would add more friction. In my experience, any sufficiently large video call will have one or two people who run into audio or screen sharing problems. Now imagine if everyone had to take the call on a Meta headset. I never saw one or two killer use cases that would make it worthwhile for a user to take a chance on an expensive new piece of hardware.

Theranos, and math that doesn’t check out

I won’t retell the story of fraudulent biotech company Theranos, since I think it’s pretty well known from the book Bad Blood and the subsequent Hulu show. What I am interested in is whether it was possible for a non-expert to assess that their extraordinary claims were impossible to achieve. I know basically nothing about blood testing, but can at least do back-of-the-napkin calculations.

The claim: Theranos said it could perform 200+ tests from a single drop of blood (which I’m assuming is ~35 microliters). Most blood tests seem to require on the order of 500-1000 microliters per test. With a single drop split 200 ways, Theranos’ device would have to do each test with at most 0.175 microliters of blood, a ~2,800x reduction in volume!¹
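The same arithmetic as a short Python sketch, using the assumed ~35 µl drop and the 500-1000 µl range for a conventional test. The ~2,800x figure is the low end of that range; at 1000 µl per test the reduction is closer to ~5,700x.

```python
# Back-of-the-napkin volume math for the Theranos claim.
# Assumptions (from the text): one drop ~ 35 µl, 200 tests per drop,
# and a conventional test using ~500-1000 µl of blood.
DROP_UL = 35.0
N_TESTS = 200
CONVENTIONAL_UL = (500.0, 1000.0)

per_test_ul = DROP_UL / N_TESTS  # blood available for each test
reductions = [c / per_test_ul for c in CONVENTIONAL_UL]

for conventional, reduction in zip(CONVENTIONAL_UL, reductions):
    print(f"{conventional:.0f} µl -> {per_test_ul:.3f} µl: {reduction:,.0f}x less blood")
```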

Sampling error: If you’re measuring a mean value from a blood sample (e.g., ferritin concentration or white blood cell count), then there will be an unavoidable sampling error, since your small random sample may not be perfectly representative of all the blood in someone’s body. Indeed, the consensus seems to be that blood from a finger prick is highly variable and not representative of venous blood. The sampling error follows a 1/sqrt(n) relationship, where n is the sample size (for a count, the number of cells or molecules captured, which scales with the volume of blood drawn).

If you’re Theranos, using a 2800x smaller blood sample means that sampling error will be sqrt(2800) = ~53x higher! A 0.1% sampling error is now a 5.3% error, and a 1% sampling error is now a 53% error! This source of error cannot be mitigated by more precise sensors; it is an unavoidable consequence of using such a tiny random sample.
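A minimal sketch of that scaling, treating the measurement as a count (say, of white blood cells) whose expected value is proportional to sample volume, so its relative error is 1/sqrt(n). The 1,000,000-cell starting count is purely hypothetical; the ~53x inflation does not depend on it.

```python
import math

VOLUME_REDUCTION = 2800  # ~2,800x less blood per test, from the estimate above


def relative_sampling_error(expected_count: float) -> float:
    """Relative std. dev. of a Poisson count with the given mean: 1/sqrt(n)."""
    return 1.0 / math.sqrt(expected_count)


# Hypothetical full-size sample expected to contain 1,000,000 cells.
n_full = 1_000_000
n_tiny = n_full / VOLUME_REDUCTION

inflation = relative_sampling_error(n_tiny) / relative_sampling_error(n_full)
print(f"error inflation: {inflation:.1f}x")

for base_error in (0.001, 0.01):
    print(f"{base_error:.1%} sampling error becomes {base_error * inflation:.1%}")
```

Changing `n_full` changes both errors but not their ratio, which is always sqrt(2800). That is why better sensors cannot fix it.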

Scale effects: On top of the sampling error, other crazy things happen when you shrink the sample volume by 2800x. The surface-area-to-volume ratio grows by a factor of ~14x (the cube root of 2800), so more of your sample is probably interacting with the container or sensor it’s in. If your test uses spectrophotometry (measuring light absorbance), then you’ll also have a ~14x shorter path length for light to travel through. But the signal-to-noise ratio gets worse when you reduce the path length! This paper found that the signal-to-noise ratio of spectrophotometry decreased by ~2.5x when the path length was reduced by a factor of 4. I don’t want to over-extrapolate, but it’s safe to say that less volume = worse signal-to-noise ratio.
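The ~14x factors come from geometry: if the sample chamber keeps its shape while its volume shrinks by a factor k, every length shrinks by the cube root of k, and the surface-area-to-volume ratio scales as 1/length. A quick check, assuming a shape-preserving shrink (a real microfluidic chamber need not scale this way):

```python
# Geometric scaling when a sample shrinks by a volume factor k, assuming
# the container keeps the same shape, so every length scales by k ** (1/3).
VOLUME_REDUCTION = 2800

length_factor = VOLUME_REDUCTION ** (1 / 3)  # how much each length shrinks
# Surface area ~ L^2 and volume ~ L^3, so SA/V ~ 1/L grows by the same factor.
sa_to_v_increase = length_factor

print(f"linear dimensions (e.g. optical path length) shrink ~{length_factor:.1f}x")
print(f"surface-area-to-volume ratio grows ~{sa_to_v_increase:.1f}x")
```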

Due to Theranos’ faked results, enthusiastic media coverage, general secrecy, and efforts to silence critics, it must have been really hard to assess what they were actually building. But the quick math above reinforces just how extraordinary their claims were, and extraordinary claims should have required extraordinary evidence. The fact that Theranos was unwilling to allow any independent analysis of their machines should have been an instant red flag, and I’m honestly baffled that they survived from 2003 to 2018.

Takeaways

How do loonshot ideas survive for so long? Theranos had a ~15-year run, Segway 19 years, and Makani about 14 years. Ginkgo Bioworks has been around since 2008, but has yet to produce a real breakthrough product with biology. The Metaverse was short-lived, but spent a small fortune that could have sustained even a large startup for decades.

I think that a big reason why big ideas go unchallenged is epistemic learned helplessness. Most of us (myself included) have been conditioned to accept that we’re not the expert on most things, so we shouldn’t trust our own intuitions outside of a narrow slice of the world. I think this is a mistake: you don’t need to be a PhD-level expert to assess the implausibility of many ideas². Reading papers, doing some back-of-the-napkin arithmetic, and (now) talking to an AI can get you surprisingly far in idea space.

To conclude, what are the common failure modes of loonshots?

For some ambitious ideas, the math just doesn’t pencil out. As we saw above, the claims made by Theranos seemed all but impossible, short of several Nobel Prize-level breakthroughs. And if they were sitting on a breakthrough, wouldn’t they want to let the scientific community in on it to help build hype?

Some ideas have a lot of hype, but no clear value proposition for early users. The Segway might have been useful in a city built for Segways, and the Metaverse might have been a cool place to hang out if all of your friends were already there.

For cases like Mighty and Makani, the tech might have been achievable, but the world was trending in a different direction. Mighty’s browser had to outrun improvements in PCs to maintain its value proposition. Makani had to undercut vastly simpler technologies (solar PV and wind turbines) on cost, even as they got ~14x and 3x cheaper, respectively.

I think that every moonshot company owes the world a transparent answer to the following questions:

How will this technological achievement improve people’s lives?

Is this idea supported by calculations that don’t violate principles of physics, statistics, biology, etc?

What targets must be achieved for this idea to compete on cost?

Does the success of this idea hinge upon lots of other smart and motivated people failing to achieve their vision? If so, why are they all wrong?

Why is there not a cheaper and simpler solution to the problem?

Does the idea rely on collective adoption by lots of people? Why is it valuable to user #1, #100, #1M, and so on?

¹ 35 µl / 200 tests = 0.175 µl per test. Going from a 500 µl sample to a 0.175 µl sample is a 2,857x reduction in volume.

² Note that I’m not suggesting that you shouldn’t trust experts! Or that you should “do your own research” when it comes to vaccines, conspiracy theories, etc. I’m also not suggesting that the Dunning-Kruger effect isn’t a problem; we have plenty of overconfident non-experts in the world.